Difference between revisions of "Apache Kafka integration"

Revision as of 08:51, 7 February 2020

Contents

- 1 Compatibility

- 2 Overview

- 3 Configuration

- 4 General Behaviour

- 5 Events

- 5.1 Structure Notification

- 5.1.1 Kafka Message Key

- 5.1.2 Additions and Modifications

- 5.1.3 Deletions

- 5.1.4 Kafka Transactions

- 5.1.5 Full Content Publication and Re-synchronisation

- 5.1.6 Not Supported: Subscription to Specific Structures

- 5.1.7 Not Supported: Publication Arbitration on Fusion Registry Load Balanced Clusters

- 5.1.8 Not Supported: Enforcement of Fusion Registry Content Security Rules to Structures Published on Kafka


Compatibility

| Product | Module | Version | Support |
|---|---|---|---|
| Fusion Registry Enterprise Edition | Core | 10.0 | Kafka producer supporting 'Structure Notification' events |

Overview

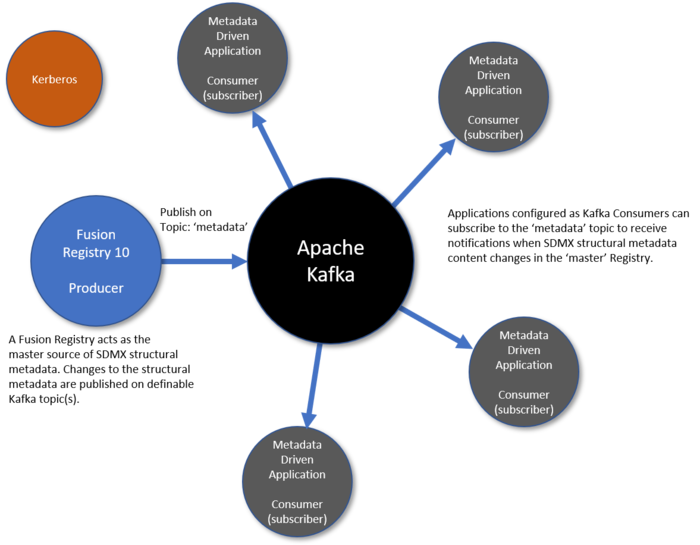

Fusion Registry can act as an Apache Kafka Producer where specified events are published on definable Kafka topics. It consists of a generalised ‘producer’ interface capable of publishing any information to definable Kafka topics, and a collection of ‘handlers’ for managing specific events.

At present there is only one event handler, Structure Notification, which publishes changes to any structures as they occur. For additions or modifications to structures, the body of the message is always an SDMX structure message. The format is configurable at the event handler level with choices including:

- SDMX-ML (1.0, 2.0 and 2.1)

- SDMX-JSON

- EDI

- Excel

- Fusion JSON (the JSON dialect that pre-dated the formal SDMX-JSON specification)

A ‘tombstone’ message is used for structure deletions with the structure URN in the Kafka Message Key, and a null body.

The list of supported events that can be published to Kafka will be extended over time.

Other events envisaged include:

- Audit events such as logins, data registrations and API calls

- Errors, for instance where a scheduled data import fails

- Configuration changes

- Changes to Content Security Rules

A Fusion Registry instance can connect to only one Kafka broker service.

Configuration

Configuration is performed through the GUI with 'admin' privileges.

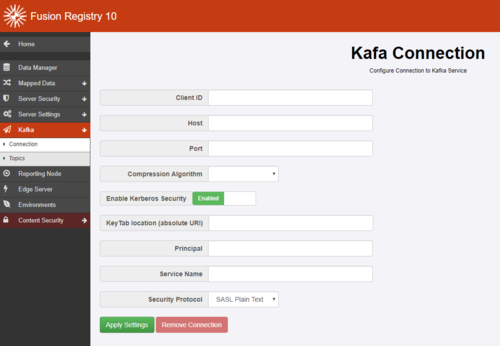

Connection

The Connection form configures the parameters needed to connect to the Kafka broker service.

| Parameter | Value |
|---|---|
| Client ID | A unique identifier for the Fusion Registry client. There are no specific restrictions on its value. |
| Host | Hostname or IP address of the Kafka service as defined by the Kafka administrator |
| Port | Port number of the Kafka service |
| Compression Algorithm | The algorithm used to compress the payload. Choices are: None, GZIP, Snappy, LZ4. |
| Enable Kerberos Security | If enabled, the Producer attempts to authenticate with the specified Kerberos service for access to Kafka |
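
As a rough illustration of how the Connection form fields relate to standard Kafka client settings, the sketch below builds a properties dictionary using the well-known Kafka configuration keys `client.id`, `bootstrap.servers` and `compression.type`. The function name `build_producer_config` is hypothetical, and the Kerberos entries (`SASL_PLAINTEXT` with the `GSSAPI` mechanism) are an assumption about a typical setup, not a description of what Fusion Registry does internally.

```python
# Hypothetical mapping from the Connection form to standard Kafka client settings.
# The form labels come from the table above; everything else is illustrative.
COMPRESSION_TYPES = {"None": "none", "GZIP": "gzip", "Snappy": "snappy", "LZ4": "lz4"}


def build_producer_config(client_id, host, port, compression="None", kerberos=False):
    """Return a dict of Kafka producer properties for the given form values."""
    if compression not in COMPRESSION_TYPES:
        raise ValueError(f"Unsupported compression algorithm: {compression}")
    config = {
        "client.id": client_id,
        "bootstrap.servers": f"{host}:{port}",
        "compression.type": COMPRESSION_TYPES[compression],
    }
    if kerberos:
        # Assumption: Kerberos over an unencrypted channel. A real deployment
        # may use SASL_SSL and usually also needs a Kerberos service name.
        config["security.protocol"] = "SASL_PLAINTEXT"
        config["sasl.mechanism"] = "GSSAPI"
    return config


if __name__ == "__main__":
    print(build_producer_config("fusion-registry-1", "kafka.example.org", 9092, "GZIP"))
```

The host name and client ID in the usage line are placeholders.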

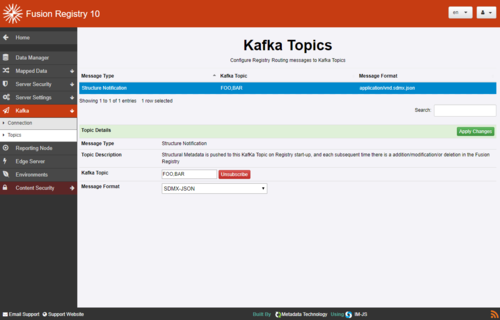

Topics

The Topics form allows configuration of which events should be published on Kafka, and on what Kafka Topics.

Kafka Topic is the ID of the topic on which to publish.

Each message can be published onto multiple topics by providing a comma-separated list of topic names.

For example, 'FOO' publishes on just the single topic FOO.

'FOO,BAR' publishes on both the FOO and BAR topics.
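
The comma-separated topic list can be pictured with the following minimal sketch. The function name `parse_topics` is hypothetical, and the trimming of whitespace and dropping of empty or repeated names are assumptions made for the example; the documentation does not state how Fusion Registry treats such input.

```python
from typing import List


def parse_topics(value: str) -> List[str]:
    """Split a comma-separated 'Kafka Topic' field into individual topic names."""
    topics: List[str] = []
    for name in value.split(","):
        name = name.strip()  # assumption: surrounding whitespace is ignored
        if name and name not in topics:  # assumption: skip blanks and repeats
            topics.append(name)
    return topics


if __name__ == "__main__":
    print(parse_topics("FOO"))
    print(parse_topics("FOO,BAR"))
```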

General Behaviour

Publication Reliability

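Message publication is not guaranteed: messages that have been staged but not yet published can be lost if the Fusion Registry service terminates.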

Fusion Registry places event messages as they are created onto an internal in-memory Staging Queue. When a message is created, an immediate attempt is made to publish to the Kafka broker cluster. If the publication fails (the Kafka service is unavailable, for instance), the message is returned to the Queue. An independent Queue Processor thread makes periodic attempts to complete the publication of staged messages.

IMPORTANT: Messages stay on the Staging Queue until they are successfully published to Kafka. However, the Staging Queue is not persistent so any staged but unpublished messages will be lost when the Fusion Registry service terminates.

Each individual event message service manages the risk of message loss in a way appropriate to its specific use case. For Structure Notification, the risk is managed by forcing consumers to re-synchronise their complete structural metadata content with the Registry on Registry startup. Even if structure change event messages were lost, Consumers' metadata is reset to a consistent state.
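
The staging behaviour described in this section can be sketched as follows. This is a simplified model, not the Registry's implementation: the class name `StagingQueue`, the `publish` callable and the choice to stop at the first failure so that message order is preserved are all assumptions. In a real service, a background thread would call `drain()` periodically.

```python
import threading
from collections import deque


class StagingQueue:
    """In-memory queue of messages awaiting publication (lost on process exit)."""

    def __init__(self, publish):
        self._publish = publish  # callable(message); raises an exception on failure
        self._pending = deque()
        self._lock = threading.Lock()

    def submit(self, message):
        """Stage a new message and immediately attempt to publish."""
        with self._lock:
            self._pending.append(message)
        self.drain()

    def drain(self):
        """Publish staged messages oldest first; return how many were published."""
        published = 0
        with self._lock:
            while self._pending:
                try:
                    self._publish(self._pending[0])
                except Exception:
                    break  # leave this and later messages staged for the next attempt
                self._pending.popleft()
                published += 1
        return published

    def __len__(self):
        with self._lock:
            return len(self._pending)
```

Because the queue lives only in memory, anything still staged when the process exits is gone, which is the loss scenario the IMPORTANT note above warns about.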

Events

This section describes the Fusion Registry events available for publication on a Kafka service.

Structure Notification

SDMX Structural Metadata is published to definable Kafka topics on Fusion Registry startup and each time structures are added / modified, or deleted. Metadata-driven applications can subscribe to the relevant topic(s) to receive notifications of changes to structures allowing them to maintain an up-to-date replica copy of the structural metadata they need.

Example use cases include:

- Structural validation applications which require up-to-date structural metadata such as Codelists and DSDs to check whether data is correctly structured.

- Structure mapping applications which require up-to-date copies of relevant Structure Sets and maps to perform data transformations.

Kafka Message Key

The SDMX Structure URN is used as the Kafka Message Key. Consumers must therefore be prepared to receive and interpret the key as the Structure URN correctly when processing the message. This is particularly important for structure deletion where the Message Key is the only source of information about which structure is being referred to.

Additions and Modifications

Addition and Modification of structures both result in an SDMX 'replace' message containing the full content of the structures. Deltas (such as the addition of a Code to a Codelist) are not supported.

Deletions

Deletion of structures results in a 'tombstone' message, i.e. one with null payload but the URN of the deleted structure in the Message Key.
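
On the consumer side, the key and tombstone conventions above suggest a simple way to maintain a replica. The sketch below is illustrative only: `apply_notification` is a hypothetical helper, the replica is a plain dictionary keyed by structure URN, and it assumes each message carries a single structure identified by its key.

```python
def apply_notification(replica, key, value):
    """Apply one Structure Notification message to a local replica.

    replica: dict mapping structure URN -> latest message body
    key:     the Kafka Message Key (the structure URN)
    value:   the message body, or None for a tombstone
    """
    if value is None:
        replica.pop(key, None)  # tombstone: forget the structure if we hold it
    else:
        replica[key] = value  # 'replace' semantics: the full content overwrites
    return replica


if __name__ == "__main__":
    urn = "urn:sdmx:org.sdmx.infomodel.codelist.Codelist=SDMX:CL_FREQ(1.0)"
    store = {}
    apply_notification(store, urn, b"<structure message bytes>")
    apply_notification(store, urn, None)
    print(store)
```

Since additions and modifications always carry the full structure, applying the same message twice leaves the replica unchanged.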

Kafka Transactions

When a single Registry process adds and / or modifies a number of structures, for example when processing an SDMX-ML message containing more than one structure, the resulting notifications are encapsulated in a single Kafka Transaction.

Structure deletions are never mixed with additions / modifications in the same transaction. This reflects the behaviour of the SDMX REST API whereby additions / modifications use HTTP POST, while deletions require HTTP DELETE.
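
The separation rule can be illustrated with a small sketch that splits an ordered list of changes into batches, each of which contains only additions / modifications or only deletions. This is a hypothetical model for illustration: `plan_transactions` and the `(urn, payload)` tuple shape are assumptions, with a `None` payload standing for a deletion.

```python
from itertools import groupby


def plan_transactions(changes):
    """Group consecutive changes so that no batch mixes upserts with deletions.

    changes: list of (urn, payload) tuples; payload is None for a deletion.
    Returns a list of batches, preserving the original order of changes.
    """
    return [
        list(batch)
        for _, batch in groupby(changes, key=lambda change: change[1] is None)
    ]


if __name__ == "__main__":
    changes = [("urn:a", b"x"), ("urn:b", b"y"), ("urn:c", None), ("urn:d", b"z")]
    for batch in plan_transactions(changes):
        print(batch)
```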

Full Content Publication and Re-synchronisation

Under certain conditions, the Fusion Registry Producer will publish all of its structures in a single SDMX Structure Message of the chosen format to Kafka.

These are:

- When a new Kafka connection is set up, or the configuration of an existing Kafka connection is changed

- Registry startup

The principle here is to ensure that Consumers have a consistent baseline against which to process subsequent change notifications.

It also mitigates the risk that changes held in the Registry's in-memory queue of messages awaiting Kafka publication were lost on shutdown by forcing Consumers to re-synchronise on Registry startup.

Not Supported: Subscription to Specific Structures

The current implementation does not allow Kafka Consumers to subscribe to changes on specific structures or sets of structures, such as those maintained by a particular Agency. Consumers subscribing to the chosen topic will receive information about all structures and will need to select what they need.
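
Since selection has to happen on the consumer side, one approach is to filter on the Message Key. The sketch below extracts the maintenance agency from an SDMX structure URN of the usual form `urn:sdmx:org.sdmx.infomodel.<package>.<class>=<agency>:<id>(<version>)`. The helper names `agency_of` and `wanted` are hypothetical, and the regular expression is a simplification that has not been checked against every URN variant.

```python
import re

# Simplified pattern for SDMX structure URNs (assumption: maintainable artefacts only).
_URN = re.compile(
    r"^urn:sdmx:org\.sdmx\.infomodel\.(?P<package>\w+)\.(?P<cls>\w+)="
    r"(?P<agency>[^:]+):(?P<id>[^(]+)\((?P<version>[^)]+)\)"
)


def agency_of(urn):
    """Return the agency ID from a structure URN, or None if it does not parse."""
    match = _URN.match(urn)
    return match.group("agency") if match else None


def wanted(urn, agencies):
    """True if the structure identified by the URN belongs to one of the agencies."""
    return agency_of(urn) in agencies


if __name__ == "__main__":
    key = "urn:sdmx:org.sdmx.infomodel.codelist.Codelist=SDMX:CL_FREQ(1.0)"
    print(agency_of(key), wanted(key, {"SDMX"}))
```

A consumer could call `wanted()` on each Message Key and skip messages it does not need, including tombstones, which carry no body to inspect.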

Not Supported: Publication Arbitration on Fusion Registry Load Balanced Clusters

IMPORTANT: There is currently no arbitration mechanism between peer Fusion Registry instances configured in a Load Balanced cluster. This means that each member of the cluster independently publishes each structure change notification to Kafka, resulting in duplication of notifications.

Structure changes made on one instance of Fusion Registry in a cluster are replicated to all of the others through either a shared database polling mechanism, or by RabbitMQ messaging. An instance receiving information that structures have changed elsewhere in the cluster updates its in-memory SDMX information model accordingly. However, the update event is also trapped by that instance's Kafka notification processor and published as normal.

Changes are required to the Kafka event processing behaviour of Registries operating in a cluster to arbitrate over which instance publishes the notification on Kafka such that a structure change is notified once and only once, as expected.

Not Supported: Enforcement of Fusion Registry Content Security Rules to Structures Published on Kafka

Fusion Registry Content Security defines rules restricting access to selected structures and items to specific groups.

The Kafka Structure Notification processor does not enforce Content Security structure rules, meaning that all structures are published to the Kafka broker service on the defined topic(s), irrespective of what restrictions may be in place. The risk here is that people or applications that would otherwise not have access to specific structures may be able to circumvent the rules normally imposed by Fusion Registry's Content Security sub-system by subscribing to the Structure Notification topic on Kafka. Kafka Streams Security allows control over access to topics. While an all-or-nothing approach of limiting access to all published structures may be sufficient for some applications, it doesn't solve the use case where a consumer should be allowed access to certain structures, but not others.

The Kafka publish-and-subscribe mechanism means that the Fusion Registry producer has no knowledge of who will be consuming the metadata at the point of publication. Up-front decisions therefore have to be made as to what rules to apply when publishing to Kafka. Options include:

- Only publish 'public' structures - i.e. those without any rules restricting access

- Publish changes for each Content Security group (and 'public') on their own separate Kafka topics - applying Kafka Security to the topics would then ensure the intended access restrictions are enforced